You’ve built the dashboards. You’ve defined the KPIs. But when you open your reports, something feels wrong. The numbers shift, definitions clash, and teams argue about what’s “correct.” You’re not alone - and the problem isn’t your tools or your tagging. It’s your foundation.

When your marketing data model is undefined or misaligned, your reports will keep lying to you, no matter how polished they look. This article breaks down five signs that indicate a broken data foundation and explains how modeling is the real solution.

Marketing dashboards are often the most trusted artifacts in a team’s decision-making process. But that trust is built on a dangerous assumption: that the numbers reflect a single version of the truth. In reality, most dashboards are built on fragmented data models and isolated queries, not a shared, governed foundation.

It’s easy to confuse a visually polished dashboard with accuracy. But if the numbers behind that dashboard are defined differently across tools - or worse, manually manipulated with inconsistent logic - then you’re building strategy on shifting ground. Teams often blame “bad data,” but the actual issue is deeper: broken modeling and undefined metrics.

You open your campaign performance dashboard on Monday. Then on Thursday, someone else on the team opens the same one, and the numbers don’t match. Neither of you changed anything. But the revenue, sessions, or cost-per-lead is suddenly different.

This isn’t a fluke - it’s a sign of broken metric governance. The underlying reason is that metrics are being calculated on the fly, often in different BI tools or SQL layers, using slightly different logic.

For example:

Even if these reports look similar, the queries running behind them vary. The same metric, “conversion,” ends up meaning three different things. So even though the report names and filters are consistent, the outcomes aren’t, and every misalignment erodes trust.
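To make the drift concrete, here is a minimal sketch of how one event stream can yield three different "conversion" counts. The event names, the `Event` type, and the three definitions are illustrative assumptions, not the logic of any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    user_id: str
    name: str        # hypothetical event names, e.g. "form_submit"
    session_id: str


# Hypothetical sample data: the same raw events feed all three reports.
EVENTS = [
    Event("u1", "form_submit", "s1"),
    Event("u1", "form_submit", "s1"),  # duplicate submit in the same session
    Event("u1", "purchase", "s2"),
    Event("u2", "form_submit", "s3"),
    Event("u3", "page_view", "s4"),
]

CONVERSION_EVENTS = {"form_submit", "purchase"}


def conversions_any_event(events):
    """Definition A: every form_submit or purchase event counts."""
    return sum(e.name in CONVERSION_EVENTS for e in events)


def conversions_per_session(events):
    """Definition B: at most one conversion per session."""
    return len({e.session_id for e in events if e.name in CONVERSION_EVENTS})


def conversions_purchases_only(events):
    """Definition C: only purchases count."""
    return sum(e.name == "purchase" for e in events)


print(conversions_any_event(EVENTS))       # 4
print(conversions_per_session(EVENTS))     # 3
print(conversions_purchases_only(EVENTS))  # 1
```

None of the three functions is wrong on its own terms. The trouble starts when each lives in a different report under the same label.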

Many teams respond to reporting discrepancies by adjusting the dashboard visuals, such as modifying charts, renaming columns, or fine-tuning filters. But the real inconsistency lies in how data is being pulled, joined, and calculated.

Reporting tools are only as reliable as the data logic behind them. If your pipeline pulls platform-specific metrics, each with its own time zones, attribution models, and conversion windows, your dashboards will reflect that mess.

For instance:

Each of these data sources applies its own business logic. Without a centralized model to standardize joins, filters, and definitions, your dashboards simply mirror the chaos. Reporting discrepancies, then, are not reporting issues - they are modeling issues.
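Time zones are one of the easiest of these differences to illustrate. The sketch below assumes a hypothetical ad account that reports in UTC-8 and a reporting standard of UTC; fixed offsets are used for simplicity, so daylight saving time is ignored.

```python
from datetime import datetime, timedelta, timezone

AD_PLATFORM_TZ = timezone(timedelta(hours=-8))  # assumed ad account time zone
REPORTING_TZ = timezone.utc                     # assumed reporting standard


def reporting_date(local_naive: datetime, source_tz: timezone) -> str:
    """Attach the source time zone, convert to the reporting zone, return the date."""
    aware = local_naive.replace(tzinfo=source_tz)
    return aware.astimezone(REPORTING_TZ).date().isoformat()


# A click logged late in the evening, in the ad platform's local time.
click = datetime(2024, 3, 1, 23, 30)

print(click.date().isoformat())               # 2024-03-01 (as the platform reports it)
print(reporting_date(click, AD_PLATFORM_TZ))  # 2024-03-02 (after normalization)
```

The same click lands on two different days depending on where the date is computed. Applying the conversion once, in the model, keeps daily totals comparable across sources.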

Without a shared data model, you’re not building reports - you’re guessing. When every tool applies its own rules, your team ends up spending more time validating numbers than making decisions.

A clean, governed data model solves this by doing three things:

Without this foundation, you’ll always be stuck in “reporting therapy” - debugging, explaining, re-justifying - instead of delivering insight.

The symptoms might look minor - a number that feels off, a question from sales about attribution, a dashboard clone someone created “just to be safe.” But these are more than annoyances. They are signs your reporting stack is misaligned, and your model is either missing or broken.

Let’s walk through each of the five most common indicators.

Metrics like "lead," "session," or "conversion" seem universal - but they’re often interpreted differently across tools, teams, and even individual analysts. One team tracks a lead as a form submission, another counts only MQLs, and a third logs any sign-up event. Without a clear agreement, your metrics lose meaning.

What counts as a lead?

This creates massive misalignment. For example, your ad campaign might show 300 leads, while your CRM shows 60. Both are technically correct, but they’re measuring different things. And unless this is documented and agreed upon, teams will continue to pursue different goals.

In the absence of a shared model, individual marketers or analysts create logic ad hoc. They write SQL that filters traffic in unique ways. They use calculated fields in Looker Studio. They tweak GA4 segments to fit campaign needs.

Each version might be valid, but together, they add up to inconsistency. Without a defined layer of metric logic, every report becomes a personal interpretation of what’s important.

When data lacks shared definitions, dashboards cease to be sources of truth - they become subjective narratives. One dashboard says conversions are up. Another says they’re flat. Teams debate the numbers instead of taking action based on them.

This undermines the role of data in decision-making. What you need isn’t more dashboards - it’s a semantic layer where metrics are defined once and used everywhere. That’s how reporting regains its power.
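As a rough illustration of "defined once, used everywhere," the sketch below keeps metric definitions in a single registry that every report calls. The registry, the `metric` decorator, and the qualification rule are hypothetical; in practice this role is usually played by a modeling or semantic layer rather than application code.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]
METRICS: Dict[str, Callable[[List[Row]], float]] = {}


def metric(name: str):
    """Register a metric definition under a single canonical name."""
    def decorator(fn):
        if name in METRICS:
            raise ValueError(f"metric {name!r} is already defined")
        METRICS[name] = fn
        return fn
    return decorator


@metric("qualified_leads")
def qualified_leads(rows: List[Row]) -> float:
    # Assumed rule: a lead is qualified once its stage is MQL or SQL.
    return sum(1 for r in rows if r["stage"] in {"MQL", "SQL"})


@metric("lead_to_qualified_rate")
def lead_to_qualified_rate(rows: List[Row]) -> float:
    # Reuses the registered definition instead of restating the rule.
    return METRICS["qualified_leads"](rows) / len(rows) if rows else 0.0


def compute(name: str, rows: List[Row]) -> float:
    """Every dashboard or export calls this instead of writing its own logic."""
    return METRICS[name](rows)


# Hypothetical sample data.
LEADS = [
    {"lead_id": 1, "stage": "new"},
    {"lead_id": 2, "stage": "MQL"},
    {"lead_id": 3, "stage": "SQL"},
    {"lead_id": 4, "stage": "new"},
]

print(compute("qualified_leads", LEADS))         # 2
print(compute("lead_to_qualified_rate", LEADS))  # 0.5
```

Rejecting a second registration under the same name is a deliberate choice here: a changed definition has to replace the old one explicitly rather than quietly coexist with it.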

One of the clearest signs your data model is broken - or never existed in the first place - is that your analysts are constantly rebuilding logic. They copy old queries, tweak filters, add custom joins, and rerun the same logic over and over. It’s inefficient, error-prone, and it wastes the one resource analysts don’t have: time.

This isn’t a workflow problem - it’s a modeling problem. Repetition reveals the absence of reusable structures. If logic isn’t abstracted into views, CTEs, or modeled tables, every team has to reinvent it. Over time, even small changes lead to big misalignments.

This is a common pattern - someone needs to add a new region, change an audience filter, or include a campaign dimension. Instead of referencing a shared model, the analyst finds the last working query, makes minor edits, and saves it as “_v3_final_FINAL.sql.”

This behavior is a symptom of two larger issues:

Without reusable views or parameterized components, even basic metrics like sessions, spend, and conversions require rebuilding logic from scratch.

When one dashboard starts returning inconsistent or unexplained results, what’s the most common solution? Someone clones it, makes changes “just to be safe,” and creates a new version. Before long, teams are choosing from five dashboards - all showing different results, none of which are fully trusted.

This proliferation of dashboards is a direct result of poor model governance. Without confidence in the shared logic behind the report, every team builds its own.

Reusable models - whether in dbt, BigQuery views, or Looker Explores - serve as the backbone of scalable reporting. When those don’t exist, analysts are stuck doing repetitive, low-value work.

Instead of analyzing trends, they spend their time:

This is not a technical debt issue - it’s a sign your reporting stack lacks structure at the core.

You launch a new campaign. Fresh creatives, new UTMs, maybe even a new landing page or funnel. Then your dashboard breaks, rows go missing, metrics flatline, and attribution falls apart. This happens not because the campaign was poorly designed, but because the reporting logic was never built to adapt.

Reports that rely on hardcoded logic or assumptions crumble under change. A resilient reporting layer requires flexibility, which can only be achieved through structured modeling.

If your reports assume fixed campaign names, specific UTM structures, or static events, any deviation from these assumptions will skew the numbers.

Suddenly:

This is a sign your report logic is hardwired into the presentation layer, not abstracted in a model that anticipates growth or change.

Marketing teams often treat UTMs as a one-time setup. But in reality, they require consistent enforcement. When teams use lowercase vs uppercase tags, or mislabel source/medium, data collection becomes fragmented.

Poor UTM hygiene leads to:

If your data model doesn’t normalize these inputs at the ingestion or transformation layer, every new campaign becomes a new risk.
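A normalization step at the transformation layer might look something like the sketch below. The alias table and the `(not set)` placeholder are assumptions chosen for illustration; a real mapping would come from your own tagging conventions.

```python
from urllib.parse import parse_qs, urlparse

# Assumed alias table: maps inconsistent medium labels to one canonical value.
MEDIUM_ALIASES = {"ppc": "cpc", "paid": "cpc", "e-mail": "email"}

UTM_KEYS = ("utm_source", "utm_medium", "utm_campaign")


def normalize_utm(url: str) -> dict:
    """Extract UTM parameters and normalize case, whitespace, and known aliases."""
    query = parse_qs(urlparse(url).query)
    result = {}
    for key in UTM_KEYS:
        raw = query.get(key, [""])[0]
        value = raw.strip().lower().replace(" ", "_")
        result[key] = value or "(not set)"
    result["utm_medium"] = MEDIUM_ALIASES.get(result["utm_medium"], result["utm_medium"])
    return result


print(normalize_utm(
    "https://example.com/?utm_source=Facebook&utm_medium=PPC&utm_campaign=Spring%20Sale"
))
# {'utm_source': 'facebook', 'utm_medium': 'cpc', 'utm_campaign': 'spring_sale'}
```

With this in place, `Facebook` and `facebook` roll up into one row, and a missing tag becomes an explicit `(not set)` value instead of a silent gap.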

In GA4, tracking an event is not enough - its custom parameters must be registered as custom dimensions or metrics before they show up in reports. If your model doesn't account for these updates:

This makes campaigns feel broken post-launch, when in fact, the reporting structure was too fragile to begin with.

This is one of the most expensive signs your reporting model is broken - misalignment between the teams that rely on each other the most. Marketing says they drove 500 leads last week. Sales says only 120 showed up in the CRM. The executive team sees both numbers and doesn’t know who to believe.

This disconnect doesn’t stem from a lack of effort; it stems from a lack of clarity in modeling. When each department relies on different tools and definitions, trust falls apart.

CRM systems and GA4 rarely agree. And they’re not supposed to - they measure different things. But without a data model that reconciles these views, the differences cause confusion and friction.

For example:

Both systems are “right,” but without a model that maps the journey between them, neither helps the business understand performance holistically.
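One simple way to start mapping the two views is to reconcile them on a shared identifier. The sketch below assumes both systems can expose a common `lead_key` (for example, a hashed email captured at form submit). That key is an assumption, and setting it up is often the hardest part.

```python
def reconcile(web_leads, crm_leads):
    """Compare web-analytics leads and CRM leads on a shared key."""
    web_keys = {lead["lead_key"] for lead in web_leads}  # sets drop duplicates
    crm_keys = {lead["lead_key"] for lead in crm_leads}
    return {
        "matched": sorted(web_keys & crm_keys),
        "web_only": sorted(web_keys - crm_keys),  # tracked on site, never reached the CRM
        "crm_only": sorted(crm_keys - web_keys),  # in the CRM with no tracked web origin
    }


# Hypothetical sample data.
WEB_LEADS = [
    {"lead_key": "a", "source": "paid_social"},
    {"lead_key": "b", "source": "email"},
    {"lead_key": "c", "source": "organic"},
    {"lead_key": "c", "source": "organic"},  # duplicate form submit
]
CRM_LEADS = [
    {"lead_key": "b", "stage": "MQL"},
    {"lead_key": "c", "stage": "SQL"},
    {"lead_key": "d", "stage": "new"},  # e.g. entered manually by sales
]

print(reconcile(WEB_LEADS, CRM_LEADS))
# {'matched': ['b', 'c'], 'web_only': ['a'], 'crm_only': ['d']}
```

The output does not declare either system correct. It shows where the gap comes from, which is what marketing and sales need in order to stop arguing over totals.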

Conversions that show up in your ad platform may never be seen in your CRM. And sales-qualified leads may not map back to specific campaigns. This lack of visibility leads to attribution wars and a breakdown in alignment.

Without a shared modeling layer:

Attribution differences are a core reason for distrust. One team uses last-click, another uses data-driven, and another builds custom logic in BigQuery.

Without alignment:

The attribution problem isn’t just technical - it’s structural. Only a unified data model can apply attribution rules consistently and visibly across platforms.
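To show what applying attribution rules "consistently and visibly" can mean, the sketch below puts three simple rule-based models behind one function. Linear attribution stands in for more complex approaches; data-driven attribution is not reproduced here. Channel names and revenue are made up.

```python
from collections import defaultdict


def attribute(touchpoints, revenue, model="last_click"):
    """Split revenue across channels; touchpoints are ordered oldest to newest."""
    if not touchpoints:
        return {}
    credit = defaultdict(float)
    if model == "last_click":
        credit[touchpoints[-1]] += revenue
    elif model == "first_click":
        credit[touchpoints[0]] += revenue
    elif model == "linear":
        share = revenue / len(touchpoints)
        for channel in touchpoints:
            credit[channel] += share
    else:
        raise ValueError(f"unknown attribution model: {model!r}")
    return dict(credit)


# Hypothetical customer journey.
path = ["paid_social", "email", "organic"]

print(attribute(path, 90.0, "last_click"))   # {'organic': 90.0}
print(attribute(path, 90.0, "first_click"))  # {'paid_social': 90.0}
print(attribute(path, 90.0, "linear"))       # {'paid_social': 30.0, 'email': 30.0, 'organic': 30.0}
```

The same journey credits three different channels depending on the model. Keeping the choice as an explicit, named parameter in one place makes the rule visible to every team instead of buried in each team's own query.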

Disconnected systems, multiple dashboards, and no unified schema create silos. Each team optimizes for what they can measure, but not for what actually drives results.

Only when data from ads, web analytics, and CRM is modeled into a unified customer view can you unlock true cross-functional reporting.

If your analysts are constantly defending numbers instead of delivering insights, it’s time to look at your model. When stakeholders don’t trust the metrics, analysts end up translating dashboards instead of analyzing the business.

This leads to burnout, backlogs, and a complete loss of confidence in what reports are actually saying.

When the conversion rate jumps one week and drops the next, without a corresponding change in business performance, stakeholders start asking questions. And if the answer is “we updated a filter” or “GA4 changed attribution settings,” confidence crumbles.

Without governed, version-controlled metrics, changes feel random, and explanations feel like excuses.

When people don’t understand what they’re looking at, they stop trusting the report. Many reporting tables are filled with ambiguous fields: “event_label_1,” “conversion_flag,” or “qualified_stage.” If the name doesn’t clearly reflect what’s being measured - or if the definition varies by source - stakeholders won’t trust it.

A mature data model includes:

Without this, you force analysts to spend hours each week re-explaining what the dashboard should have already communicated.

When a CMO or VP asks, “Why don’t these leads match last week’s?” - and the analyst has to run three SQL queries just to explain - that’s a system failure.

The analyst should be surfacing patterns and recommendations. Instead, they’re stuck babysitting definitions and debugging dashboards. The fix? Provide them with a well-defined model where metric definitions are codified, transparent, and shared. Only then can analysts shift from defense to strategy.

Most reporting problems don’t come from visualization tools - they come from what happens before the data ever reaches the dashboard. If your logic is spread across ten different queries, your metrics are undefined, and your joins change per analyst, no BI tool will save you.

The solution is simple, but fundamental: model before you report.

Many teams respond to mistrust in reports by adding more dashboards. One for leadership. One for paid media. One for the CRM. One for “just in case.”

However, more dashboards don’t fix misalignment; they multiply it. Every time a metric is rebuilt instead of reused, inconsistency creeps in. Instead of gaining visibility, you dilute the truth.

A dashboard is only as trustworthy as the model behind it. Until your logic is centralized in one place, rather than spread across five, adding more charts just adds to the confusion.

A strong model enforces the same logic across every system. Whether you're reporting on GA4 sessions, Meta ad spend, CRM leads, or revenue, a centralized model ensures that all metrics are joined, filtered, and defined consistently.

This doesn’t just prevent mistakes - it creates confidence. Teams stop questioning the numbers and start acting on them. Leadership stops asking “why are these different?” and starts asking “how do we improve this?”

A centralized model turns dashboards into decisions.

Instead of rewriting “Qualified Leads” logic in four different tools, define it once in your model and reuse it everywhere.

This is how scalable reporting works:

The result: analysts spend less time coding. Marketers get faster answers. Executives get aligned views, and your reporting stops breaking every time a campaign changes.

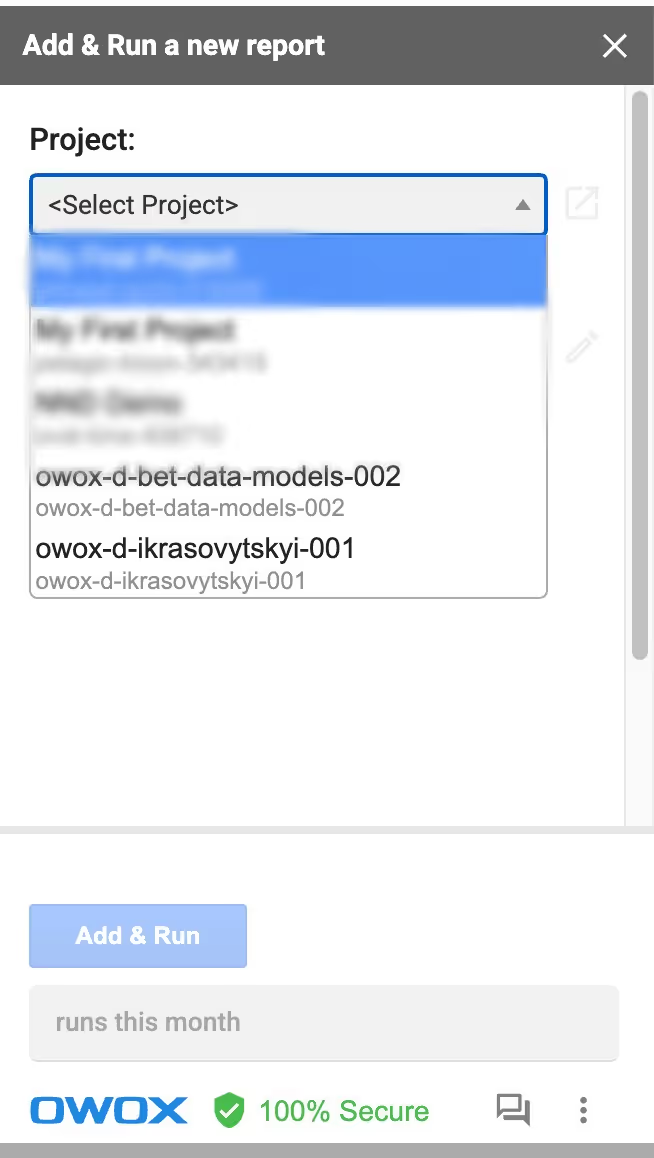

Most tools promise better dashboards. OWOX BI does something better: it fixes what’s underneath.

OWOX BI is a modeling-first platform, not just a dashboarding tool. It comes with a pre-built, GA4-ready data model that gives your team clean joins, trusted logic, and reusable metric definitions out of the box. It doesn’t just make reporting faster - it makes reporting consistent, accurate, and scalable.

OWOX BI isn’t guessing how marketing data works - it has already modeled it. With over a decade of experience helping marketing analysts build clean pipelines, OWOX BI encodes best practices right into its architecture.

You don’t have to start from scratch. You start with a model that’s already solved the hard parts - attribution, deduplication, metric logic - and build from there.

Need to track leads, sessions, conversions, spend, or touchpoints across channels? OWOX BI comes with those datasets already modeled and ready to use. Each entity (user, session, campaign, channel, and lead) is cleanly defined with built-in relationships.

That means less time structuring raw data and more time answering business questions.

OWOX BI gives analysts ownership over metric logic, but makes those definitions accessible to marketers through clear, self-serve tools. No more digging through SQL. No more Slack threads asking “which number is right?”

With metric governance in place, analysts can focus on insights, and marketers can move faster with confidence.

Built natively for BigQuery, OWOX BI integrates easily with the rest of your stack. Push data into Looker Studio, Sheets, or any other tool - all powered by the same clean model. It doesn’t matter where you view the report. The logic stays the same.

That’s what true consistency looks like.

Without trust, even the most beautiful dashboard becomes background noise. No one wants to act on a number they don’t understand or believe. And when every team sees a different version of the same metric, decision-making grinds to a halt.

The problem isn’t the data. It’s the model.

Trust is built when metrics are defined once, applied consistently, and surfaced clearly. Without this foundation, insight is impossible, and data becomes just another argument.

Every marketing team wants to be data-driven. But being data-driven isn’t about volume - it’s about confidence. Confidence that the numbers are right. That everyone is seeing the same thing. That metrics reflect shared goals and agreed-upon definitions.

Only when this trust exists can teams move fast, experiment meaningfully, and make decisions backed by truth, not politics.

You don’t need to build trust into every dashboard manually. You build it once, in your data model. Then it flows through every report automatically.

This is what mature data organizations do:

Trust isn’t a dashboard feature - it’s a modeling choice.

More BI tools won’t save you. More dashboards won’t align your team. What you need is a consistent foundation - a shared, governed model that everyone uses.

This is where real transformation happens:

Start with the model. Everything else gets easier.

If you're constantly fixing dashboards that break every quarter, spending hours aligning numbers between GA4 and your CRM, or second-guessing every campaign report, it's time to stop patching symptoms and fix the root cause.

OWOX BI gives you a battle-tested, marketing-specific data model that brings structure and trust to your reporting. Define your metrics once. Apply them everywhere. And finally, start reporting like a team that knows what it’s doing.

Different tools apply different logic, filters, and attribution rules to calculate the same metric. Without a centralized data model, each platform defines “conversions” or “leads” differently, leading to mismatched numbers across dashboards.

Start by building a shared data model where key metrics are defined, joined, and calculated in one place. When every team pulls from the same logic layer, reports across tools become consistent by design, not coincidence.

New campaigns introduce unregistered events, inconsistent UTM tags, or new dimensions that static reports can’t handle. A flexible data model helps adapt to campaign changes without manual rework.

CRM systems and GA4 track leads differently, based on timing, interactions, or qualification stages. Without a unified model to reconcile them, you’ll always see discrepancies between source data.

Use a governed data model with clearly defined metrics and consistent logic across tools. This shifts the burden from analysts defending numbers to stakeholders trusting what they see.

No. Adding tools without fixing the underlying logic creates more inconsistencies. The real fix is defining metrics once in a centralized model, so every tool reflects the same trusted data.